AI Is Forcing a Rethink of the Entire Software Stack

Introduction

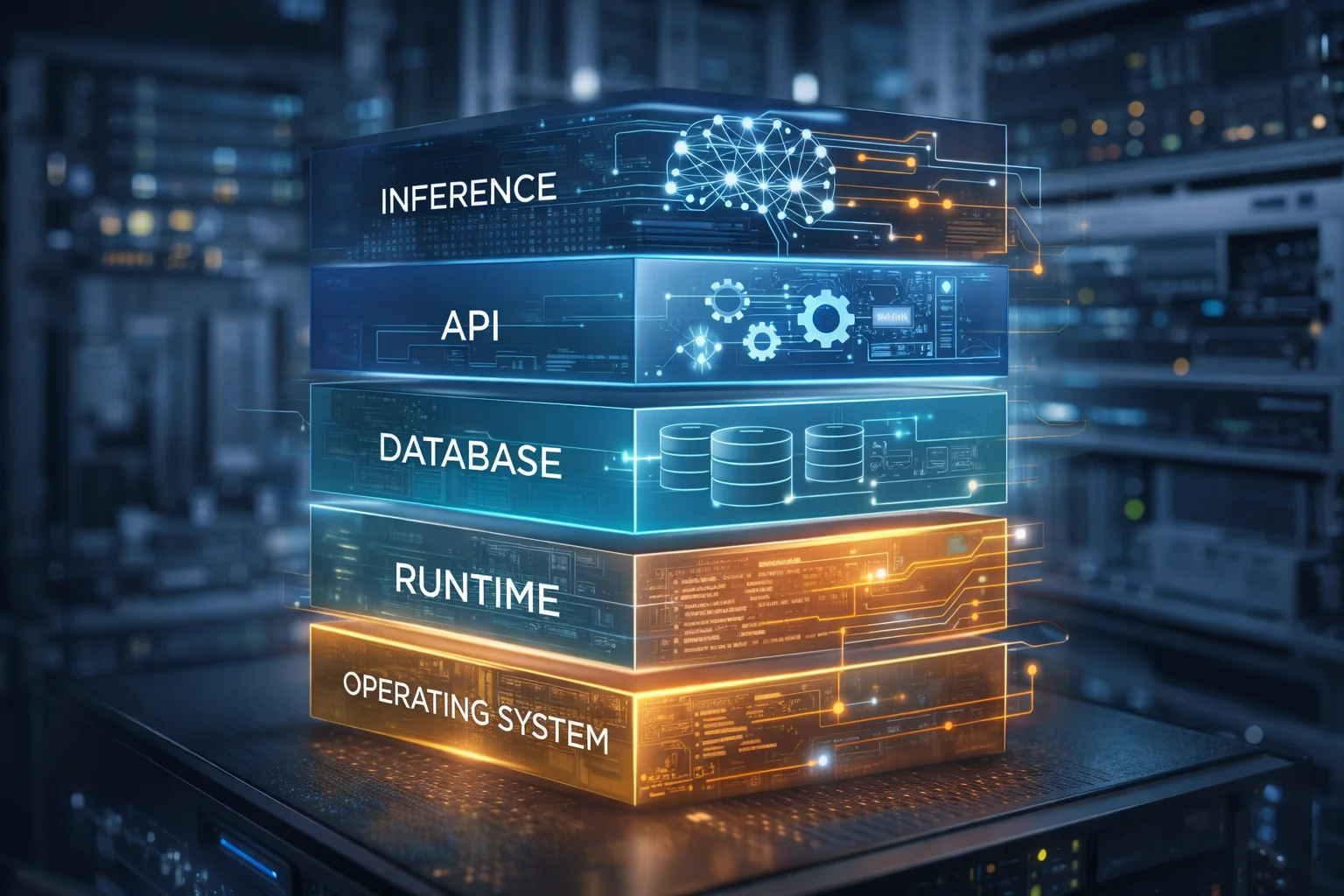

Artificial intelligence workloads are fundamentally altering how software systems are designed, deployed, and operated. Unlike traditional applications that process deterministic inputs through predictable code paths, AI systems introduce probabilistic computation, massive parameter sets, and inference patterns that challenge conventional software architecture. This shift is compelling enterprises to reconsider every layer of their technology stack, from hardware abstraction to application interfaces.

The transformation extends beyond simply adding AI capabilities to existing systems. Companies are discovering that AI workloads require different approaches to memory management, networking, storage, and compute orchestration. These requirements are driving changes in operating systems, middleware, databases, and application frameworks. The result is an emerging AI software stack architecture that looks markedly different from traditional enterprise software platforms.

Background

Traditional software stacks evolved around CPU-centric architectures optimized for sequential processing and predictable resource consumption. Applications typically followed request-response patterns with known memory footprints and processing times. Infrastructure could be sized based on concurrent user loads and peak transaction volumes.

AI workloads break these assumptions. Training large language models requires coordinating computation across thousands of GPUs, managing multi-terabyte datasets, and handling dynamic memory allocation patterns that can shift during execution. Inference workloads introduce different challenges: latency that is hard to predict, computational intensity that varies with input complexity, and the need to serve multiple model versions simultaneously.

Current enterprise software stacks struggle with these patterns. Linux schedulers, optimized for fairness across CPU processes, have little visibility into GPU work and handle GPU-intensive workloads poorly. Traditional databases, without purpose-built indexes, cannot efficiently handle the vector operations and similarity searches that AI applications require. Network protocols designed for small, frequent messages struggle with the large tensor transfers between distributed AI components.

These mismatches have prompted fundamental questions about software architecture. Major cloud providers are developing specialized system software for AI workloads. Database vendors are rebuilding storage engines around vector operations. Application frameworks are incorporating inference engines as first-class components rather than external services.

Key Findings

Operating System Layer Adaptations

The operating system layer faces the most immediate pressure from AI workloads. Traditional CPU schedulers cannot effectively manage mixed workloads where some processes require exclusive GPU access while others need fine-grained CPU sharing. Google's development of specialized schedulers for Tensor Processing Units demonstrates this challenge. The approach involves bypassing standard Linux scheduling for AI workloads, using dedicated resource managers that understand accelerator memory hierarchies and inter-device communication patterns.
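The mixed-workload problem described above can be illustrated with a toy placement routine. This is a minimal sketch, not any real scheduler: the `Node` and `Job` types, the first-fit policy, and the CPU accounting in thousandths of a core are all assumptions made for illustration. It shows only the core asymmetry, that GPUs are handed out whole while CPU is sliced.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical node: GPUs are counted whole, CPU in thousandths of a core."""
    free_gpus: int
    free_cpu_millis: int
    placed: List[str] = field(default_factory=list)


@dataclass
class Job:
    """Hypothetical job request."""
    name: str
    gpus: int = 0        # granted exclusively, never shared
    cpu_millis: int = 0  # may be a small slice of a core


def place(job: Job, nodes: List[Node]) -> Optional[int]:
    """First-fit placement: return the index of the chosen node, or None."""
    for i, node in enumerate(nodes):
        if node.free_gpus >= job.gpus and node.free_cpu_millis >= job.cpu_millis:
            node.free_gpus -= job.gpus
            node.free_cpu_millis -= job.cpu_millis
            node.placed.append(job.name)
            return i
    return None


nodes = [Node(free_gpus=2, free_cpu_millis=4000),
         Node(free_gpus=0, free_cpu_millis=8000)]
jobs = [Job("train", gpus=2, cpu_millis=2000),
        Job("web", cpu_millis=500),
        Job("infer", gpus=1, cpu_millis=1000)]
placements = {job.name: place(job, nodes) for job in jobs}
```

In this example the inference job stays unplaced even though plenty of CPU remains, because no node has a free GPU. A fairness-oriented CPU scheduler has no way to express that constraint.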

Memory management represents another critical adaptation. AI models often require loading multi-gigabyte parameter sets into GPU memory, while simultaneously managing host memory for data preprocessing and result postprocessing. Standard virtual memory systems cannot efficiently handle these patterns. NVIDIA's Unified Memory system and AMD's HIP memory management represent early attempts to address this, but operating system kernels increasingly need native support for heterogeneous memory architectures.
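A back-of-the-envelope calculation shows why parameter sets strain device memory. The sketch below assumes a hypothetical 7-billion-parameter model and a 20% headroom reserved for activations and buffers; both figures are illustrative assumptions, not measurements.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}
GIB = 1024 ** 3


def weight_bytes(num_params: int, dtype: str) -> int:
    """Memory needed for the weights alone, ignoring activations."""
    return num_params * BYTES_PER_PARAM[dtype]


def fits_on_device(num_params: int, dtype: str, device_bytes: int,
                   headroom: float = 0.2) -> bool:
    """True if the weights fit after reserving `headroom` of device memory.

    The 20% default headroom is an assumption for illustration only.
    """
    return weight_bytes(num_params, dtype) <= device_bytes * (1 - headroom)


params = 7_000_000_000  # hypothetical model size
fp16_gib = weight_bytes(params, "fp16") / GIB  # roughly 13 GiB
fits_16 = fits_on_device(params, "fp16", 16 * GIB)
fits_24 = fits_on_device(params, "fp16", 24 * GIB)
```

Under these assumptions the half-precision weights do not fit on a 16 GiB device once headroom is reserved, but do fit on a 24 GiB device.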

Container orchestration systems like Kubernetes have added GPU scheduling capabilities, but these additions reveal deeper architectural limitations. The assumption that containers represent isolated, stateless compute units breaks down when dealing with AI workloads that may need to maintain model state across requests or coordinate across multiple nodes for distributed inference.

Database and Storage Layer Evolution

AI applications require fundamentally different data access patterns than traditional enterprise applications. Vector databases have emerged as a distinct category because standard relational databases cannot efficiently perform the similarity searches and nearest-neighbor operations that AI applications require. Companies like Pinecone and Weaviate have built specialized storage engines optimized for high-dimensional vector operations.
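The similarity search at the heart of these systems can be sketched as a brute-force scan. This is a minimal illustration using cosine similarity over an in-memory dictionary; it is not how Pinecone or Weaviate are implemented, and the document keys and vectors are made up. Production engines typically replace the linear scan with approximate indexes.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest(query: Sequence[float], index: Dict[str, Sequence[float]],
            k: int = 2) -> List[str]:
    """Return the keys of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), key) for key, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [key for _, key in scored[:k]]


# Hypothetical three-dimensional embeddings.
index = {
    "doc_a": [1.0, 0.0, 0.0],
    "doc_b": [0.9, 0.1, 0.0],
    "doc_c": [0.0, 1.0, 0.0],
}
top = nearest([1.0, 0.05, 0.0], index, k=2)  # ["doc_a", "doc_b"]
```

The scan touches every stored vector for every query. That cost is easy to bear for three documents and impractical for millions, which is why specialized indexes exist.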

However, the challenge extends beyond specialized databases. AI training workloads generate massive amounts of intermediate data that must be stored and retrieved efficiently. Traditional file systems optimized for small files and metadata operations struggle with the large, sequential data patterns common in AI workloads. Parallel file systems such as Lustre, originally designed for high-performance computing, are finding new applications in AI infrastructure.

The emergence of model registries and artifact stores represents another evolution in storage architecture. Unlike traditional application deployments where code and configuration are relatively static, AI applications must manage multiple model versions, track model lineage, and coordinate model updates across distributed inference systems. MLflow and similar platforms represent purpose-built solutions, but they highlight how AI workloads require different approaches to versioning and deployment.
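The versioning and lineage concerns above can be made concrete with a small in-memory registry. This is a sketch under stated assumptions: the `ModelRegistry` class, its method names, and the artifact URIs are hypothetical and do not reflect the MLflow API or any other product.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact_uri: str               # where the weights live (hypothetical URI)
    parent_version: Optional[int]   # version this one was derived from


class ModelRegistry:
    """Toy registry: tracks versions, lineage, and which version is serving."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}
        self._serving: Dict[str, int] = {}

    def register(self, name: str, artifact_uri: str,
                 parent_version: Optional[int] = None) -> ModelVersion:
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, artifact_uri, parent_version)
        versions.append(entry)
        return entry

    def promote(self, name: str, version: int) -> None:
        """Mark a version as serving; promoting an older one is a rollback."""
        if not any(v.version == version for v in self._versions.get(name, [])):
            raise KeyError(f"{name} v{version} is not registered")
        self._serving[name] = version

    def serving(self, name: str) -> Optional[int]:
        return self._serving.get(name)

    def lineage(self, name: str, version: int) -> List[int]:
        """Walk parent links from a version back to its root."""
        by_version = {v.version: v for v in self._versions[name]}
        chain: List[int] = []
        current: Optional[int] = version
        while current is not None:
            chain.append(current)
            current = by_version[current].parent_version
        return chain


registry = ModelRegistry()
registry.register("ranker", "s3://example-bucket/ranker/1")
registry.register("ranker", "s3://example-bucket/ranker/2", parent_version=1)
registry.register("ranker", "s3://example-bucket/ranker/3", parent_version=2)
registry.promote("ranker", 3)
registry.promote("ranker", 2)  # rollback to the previous version
```

Treating rollback as an ordinary promotion of an earlier version is a design choice made here for brevity. A real registry would also record who promoted what and when.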

Network and Communication Layer Changes

AI systems require different networking patterns than traditional distributed applications. Model parallelism involves splitting large models across multiple devices, requiring high-bandwidth, low-latency communication between GPU nodes. This pattern differs significantly from the request-response communication typical in web applications or the message passing common in microservices architectures.

InfiniBand networks, traditionally used in high-performance computing, have become common in AI clusters because conventional data-center Ethernet, without RDMA support, often cannot deliver the bandwidth and latency that efficient model parallelism requires. NVIDIA's NVLink and similar interconnects address the same problem at the hardware level for GPU-to-GPU links, but they require corresponding changes in network software stacks.

The communication patterns also affect higher-level protocols. gRPC and similar frameworks optimized for small message passing struggle with the large tensor transfers common in distributed AI systems. Specialized communication libraries like NCCL (NVIDIA Collective Communications Library) have emerged to handle these patterns, but they operate alongside rather than within traditional networking stacks.
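To show what a collective communication pattern looks like, the sketch below simulates ring all-reduce, one widely described algorithm for summing a buffer across workers. It is a single-process simulation over Python lists, assuming one scalar per chunk and as many chunks as workers. It does not use or represent the NCCL API.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate ring all-reduce (sum) across n workers.

    Each worker holds n chunks, simplified here to one number per chunk.
    Every step, each worker sends one chunk to its right-hand neighbour.
    """
    n = len(buffers)
    if any(len(b) != n for b in buffers):
        raise ValueError("each worker needs exactly one chunk per worker")
    data = [list(b) for b in buffers]  # copy so inputs are not mutated

    # Phase 1, reduce-scatter: after n-1 steps worker r holds the
    # fully summed chunk (r + 1) % n.
    for step in range(n - 1):
        # Snapshot all sends first so the exchange is simultaneous.
        sends = [((r - step) % n, data[r][(r - step) % n]) for r in range(n)]
        for r, (chunk, value) in enumerate(sends):
            data[(r + 1) % n][chunk] += value

    # Phase 2, all-gather: circulate the finished chunks around the ring.
    for step in range(n - 1):
        sends = [((r + 1 - step) % n, data[r][(r + 1 - step) % n])
                 for r in range(n)]
        for r, (chunk, value) in enumerate(sends):
            data[(r + 1) % n][chunk] = value

    return data


result = ring_all_reduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
# Every worker ends with [111, 222, 333].
```

Each worker sends 2(n-1) chunks in total and only ever talks to one neighbour. That is a very different traffic shape from the many small independent requests that RPC frameworks are built around.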

Application Framework Integration

Application frameworks are increasingly treating AI capabilities as native components rather than external services. Web frameworks such as FastAPI are widely used to expose models over HTTP, and dedicated model servers treat model loading and inference pipelines as first-class concerns. However, this integration reveals architectural challenges around resource management and scaling.

Traditional web application frameworks assume stateless request processing where each request can be handled independently. AI inference often requires loading large models into memory, creating a tension between efficient resource utilization and response latency. Some frameworks cache models in memory, accepting higher memory usage for lower latency. Others dynamically load models, trading latency for resource efficiency.
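The trade-off between caching and dynamic loading can be sketched as a bounded least-recently-used cache. The `ModelCache` class and the stand-in loader below are hypothetical. A real loader would read weights from disk or object storage, which is where the latency cost arises.

```python
from collections import OrderedDict
from typing import Any, Callable


class ModelCache:
    """Keep at most `capacity` models resident; evict the least recently used."""

    def __init__(self, loader: Callable[[str], Any], capacity: int) -> None:
        self._loader = loader
        self._capacity = capacity
        self._models: "OrderedDict[str, Any]" = OrderedDict()
        self.loads = 0  # counts slow-path loads, for illustration

    def get(self, name: str) -> Any:
        if name in self._models:
            self._models.move_to_end(name)  # mark as most recently used
            return self._models[name]
        model = self._loader(name)          # slow path: load on demand
        self.loads += 1
        self._models[name] = model
        if len(self._models) > self._capacity:
            self._models.popitem(last=False)  # drop least recently used
        return model


# Stand-in loader; a real one would deserialize model weights.
cache = ModelCache(loader=lambda name: f"weights:{name}", capacity=2)
for requested in ["a", "b", "a", "c", "b"]:
    cache.get(requested)
```

Raising `capacity` moves the system toward the cache-everything end of the trade-off (more memory, fewer slow loads), while a capacity of one approximates loading on every model switch.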

The batch processing patterns common in AI training also challenge traditional application architectures. Frameworks designed around real-time request handling struggle to efficiently manage the large batch jobs typical in model training and bulk inference operations.
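A bulk inference job differs from request handling mainly in that inputs are grouped before the model is invoked. The sketch below is a minimal illustration. `bulk_infer` and the toy `double` model are hypothetical names, and the batch size is arbitrary.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def batched(items: Iterable, batch_size: int) -> Iterator[List]:
    """Yield consecutive lists of up to `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(items)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def bulk_infer(model_fn: Callable[[List], List], inputs: Iterable,
               batch_size: int) -> List:
    """Run `model_fn` once per batch instead of once per input."""
    outputs: List = []
    for batch in batched(inputs, batch_size):
        outputs.extend(model_fn(batch))
    return outputs


calls = []


def double(batch: List[int]) -> List[int]:
    """Toy stand-in for a model; records how many items each call received."""
    calls.append(len(batch))
    return [2 * x for x in batch]


results = bulk_infer(double, range(10), batch_size=4)
```

Ten inputs produce three model invocations of sizes 4, 4, and 2 rather than ten separate calls, which is the amortization that per-request frameworks do not provide by default.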

Implications

The architectural changes driven by AI workloads have significant implications for enterprise technology strategy. Organizations cannot simply add AI capabilities to existing infrastructure without considering the broader system impacts. The specialized hardware requirements, different resource utilization patterns, and novel communication needs affect procurement, staffing, and operational processes.

Cost structures shift dramatically in AI-centric architectures. Traditional enterprise software environments can often be sized based on user concurrency and transaction volumes, providing predictable capacity planning. AI workloads introduce highly variable resource consumption patterns where inference costs depend on model complexity, input characteristics, and quality requirements. This variability complicates both technical architecture and financial planning.
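The variability in inference cost can be illustrated with simple arithmetic. Every number below (throughput, hourly GPU price, request sizes) is a made-up assumption chosen to keep the arithmetic easy, and the model ignores batching and idle time.

```python
from typing import List


def request_cost(tokens: int, tokens_per_second: float,
                 gpu_hour_price: float) -> float:
    """Cost of one request if it occupies a GPU for its full duration."""
    gpu_seconds = tokens / tokens_per_second
    return gpu_seconds * gpu_hour_price / 3600


# Hypothetical figures, not benchmarks or real prices.
THROUGHPUT = 100.0     # tokens generated per second
PRICE_PER_HOUR = 3.60  # currency units per GPU-hour

request_sizes: List[int] = [50, 500, 5000]  # tokens per request
costs = [request_cost(t, THROUGHPUT, PRICE_PER_HOUR) for t in request_sizes]
spread = max(costs) / min(costs)  # ratio between priciest and cheapest request
```

Under these assumptions, requests to the same endpoint differ in cost by a factor of about one hundred. That is the kind of spread that concurrency-based capacity planning does not anticipate.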

Operational complexity increases across multiple dimensions. AI systems require new monitoring approaches that track model performance alongside traditional system metrics. Model drift, inference accuracy, and training convergence become operational concerns that require specialized tooling and expertise. Traditional DevOps practices must evolve to encompass MLOps requirements around model versioning, deployment, and rollback procedures.
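One simple way to turn model drift into an operational signal is to compare recent model outputs against a baseline window. The sketch below uses a standardized mean shift with an arbitrary threshold of 2.0. Both the metric and the threshold are illustrative assumptions, and production monitoring usually relies on richer statistics.

```python
import statistics
from typing import Sequence


def mean_shift_score(baseline: Sequence[float], recent: Sequence[float]) -> float:
    """Shift of the recent mean, in units of baseline standard deviation."""
    mu = statistics.fmean(baseline)
    sigma = statistics.pstdev(baseline)
    recent_mu = statistics.fmean(recent)
    if sigma == 0:
        # A constant baseline: any change at all counts as unbounded shift.
        return 0.0 if recent_mu == mu else float("inf")
    return abs(recent_mu - mu) / sigma


def drifted(baseline: Sequence[float], recent: Sequence[float],
            threshold: float = 2.0) -> bool:
    """Flag drift when the score exceeds the (assumed) threshold."""
    return mean_shift_score(baseline, recent) > threshold


# Hypothetical prediction scores from a baseline week and two recent windows.
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.5]
stable_window = [0.30, 0.30, 0.35]
shifted_window = [0.8, 0.9, 1.0]

stable_alert = drifted(baseline_scores, stable_window)    # False
shifted_alert = drifted(baseline_scores, shifted_window)  # True
```

A check like this can run alongside latency and error-rate alerts, so that model behaviour is monitored with the same tooling as system health.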

Security models also require reconsideration. AI models themselves become valuable assets that require protection, but they also introduce new attack vectors through adversarial inputs and model extraction techniques. Traditional security frameworks focused on data and application protection must expand to cover model integrity and inference security.

The talent implications extend beyond hiring data scientists. Infrastructure teams need to understand GPU architectures, specialized networking requirements, and the operational characteristics of AI workloads. Development teams must learn to work with probabilistic systems where testing and debugging require different approaches than deterministic applications.

Considerations

Several factors complicate the transition to AI-optimized software stacks. The rapid pace of hardware evolution means that software architectures optimized for current GPU generations may become inefficient as new hardware arrives. The specialized nature of AI infrastructure creates vendor lock-in risks, particularly around hardware-specific optimization libraries and communication protocols.

Performance optimization in AI systems often requires deep integration between hardware and software layers, making it difficult to maintain the abstraction layers that enable operational flexibility in traditional systems. Organizations must balance optimization for specific AI workloads against maintaining general-purpose capabilities for existing applications.

The cost implications of AI-optimized infrastructure can be substantial. Specialized hardware, high-bandwidth networking, and the compute resources required for AI workloads represent significant capital investments. Organizations must carefully evaluate whether their AI use cases justify these infrastructure costs or whether cloud-based AI services provide more cost-effective alternatives.

Integration challenges arise when organizations need to operate both traditional and AI-optimized infrastructure simultaneously. Hybrid approaches require sophisticated resource management and orchestration capabilities to efficiently utilize both types of infrastructure while maintaining operational simplicity.

The evolving nature of AI software stacks also creates technology selection risks. Early adoption of specialized AI infrastructure may result in stranded investments as the technology landscape continues to evolve rapidly. Organizations must balance the benefits of optimized AI infrastructure against the risks of premature standardization on emerging technologies.

Key Takeaways

• AI workloads require fundamentally different software architecture patterns than traditional enterprise applications, driving changes across every layer of the technology stack from operating systems to application frameworks.

• Memory management, scheduling, and resource allocation mechanisms designed for CPU-centric workloads struggle with the GPU-intensive, mixed-precision computation patterns common in AI systems.

• Vector databases and specialized storage systems are becoming necessary components as AI applications require different data access patterns than traditional relational database workloads.

• Networking and communication layers must adapt to handle the high-bandwidth tensor transfers and collective communication patterns required for distributed AI computation.

• Traditional DevOps practices must be extended with MLOps capabilities that treat model versioning, deployment, monitoring, and lifecycle management as operational concerns.

• The transition to AI-optimized software stacks introduces new cost structures, operational complexities, and talent requirements that affect enterprise technology strategy beyond just AI initiatives.

• Organizations must carefully balance the benefits of specialized AI infrastructure against integration challenges, vendor lock-in risks, and the rapid evolution of the underlying technology landscape.